GPUs are the engines of AI. But do you truly understand how they function and why they're so costly? Let me break it down for you.

Today I'll cover:

- An Introduction to GPUs

- The Basic Principles of GPU Functionality

- Comparing GPUs in AI Training and Inference

- AI Supercomputers

- Selecting the Appropriate GPU for Your Business Needs

- Balancing Cost Optimization and Performance

- The Future of AI Computers and Chips

Let’s Dive In! 🤿

An Introduction to GPUs

The driving force behind the AI revolution is the massive computational power required for training and inference tasks. GPUs have emerged as the powerhouse for AI applications. They were initially designed for rendering graphics and video (I still remember buying my first cards for my PC to play video games), but their architecture, parallel processing capabilities, and speed have made them ideal for accelerating AI workloads.

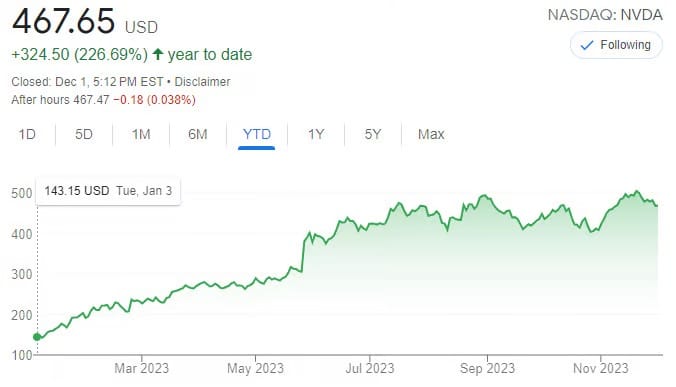

In the dynamic world of GPU technology, several prominent vendors have established themselves as leaders, each contributing its own innovations and solutions to power the AI revolution. These vendors include Nvidia, AMD, and Intel. Enterprise adoption is reflected in their stock prices: Nvidia, for example, is up 226% year-to-date.

The Basic Principles of GPU Functionality

These are some of the main characteristics:

- GPU Architecture: GPUs are specialized hardware with thousands of small processing cores designed for parallel computing. On Nvidia GPUs, those cores are grouped into Streaming Multiprocessors (SMs), each containing dozens of cores along with its own schedulers and fast on-chip memory. AMD's equivalent unit is called a Compute Unit.

- Parallel Processing: More GPU cores mean more parallel processing power, as long as the workload has abundant parallelism. GPUs excel at performing thousands of calculations simultaneously, which makes them ideal for large datasets and for the matrix operations at the heart of neural networks.

- Speed and Performance: GPUs deliver very high computational throughput, significantly reducing the time required to train complex neural networks. Their cores switch rapidly between groups of threads. When some threads stall waiting on slow memory accesses, others keep running. This tolerance for memory latency keeps the cores highly utilized.

- Support for AI Frameworks: GPU manufacturers provide specialized software libraries, such as Nvidia's cuDNN and AMD's ROCm platform. Popular AI frameworks like TensorFlow and PyTorch build on these libraries.

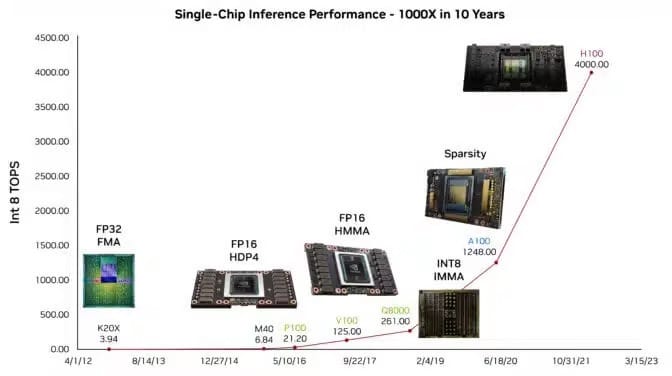

- Accelerating AI Research: GPUs have played a vital role in speeding up AI research, leading to breakthroughs in various applications. Dramatic hardware performance gains have enabled advances in generative AI models like ChatGPT. Further speedups will continue driving machine learning progress.
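The latency-tolerance point in the list above can be made concrete with a toy model. The sketch assumes that every warp (a group of 32 threads on Nvidia GPUs) repeatedly computes for a fixed number of cycles and then waits on memory. The cycle counts are hypothetical, not measurements of any real GPU. The sketch estimates how many warps must be resident so that one is always ready to run.

```python
import math


def warps_to_hide_latency(compute_cycles: int, memory_latency_cycles: int) -> int:
    """Minimum number of resident warps so that one is always ready to issue.

    Toy model (an assumption): each warp loops forever over
    'compute for compute_cycles, then stall for memory_latency_cycles'.
    """
    period = compute_cycles + memory_latency_cycles
    return math.ceil(period / compute_cycles)


def core_utilization(num_warps: int, compute_cycles: int, memory_latency_cycles: int) -> float:
    """Fraction of cycles the cores are busy, in the same toy model."""
    period = compute_cycles + memory_latency_cycles
    # Each warp keeps the cores busy for compute_cycles out of every period.
    return min(1.0, num_warps * compute_cycles / period)


# Hypothetical numbers: 20 cycles of math between loads, 400-cycle memory latency.
needed = warps_to_hide_latency(20, 400)
print(needed)                                   # 21
print(round(core_utilization(8, 20, 400), 2))   # 0.38
print(core_utilization(32, 20, 400))            # 1.0
```

With too few warps, the cores sit idle during memory stalls. With enough warps, one warp's stall overlaps with another's work. This is why GPUs favor many lightweight threads over a few fast ones.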

Nvidia is a pioneer in the GPU industry and has played a pivotal role in advancing GPU technology for AI applications. Their GPUs, such as the A100 and H100 series, are known for their exceptional performance and support for AI frameworks. Nvidia's CUDA platform has become a standard for GPU-accelerated AI development, making it a go-to choice for researchers and developers.
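CUDA exposes the GPU's parallelism by launching a grid of thread blocks. The hardware schedules the blocks onto SMs. Each thread works out which piece of data it owns from its block and thread indices. The Python sketch below only simulates that indexing pattern for a vector addition. It loops sequentially where a real GPU would run the threads concurrently. The function names are hypothetical and are not part of any CUDA API.

```python
def blocks_needed(n: int, threads_per_block: int) -> int:
    """Ceiling division: enough blocks that every element gets a thread."""
    return (n + threads_per_block - 1) // threads_per_block


def vector_add_thread(block_idx, block_dim, thread_idx, a, b, out):
    """Work done by one simulated GPU thread.

    Mirrors the usual CUDA expression
    i = blockIdx.x * blockDim.x + threadIdx.x.
    """
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # the last block may contain spare threads
        out[i] = a[i] + b[i]


def launch_vector_add(a, b, threads_per_block=256):
    """Simulate a 1-D kernel launch over len(a) elements."""
    out = [0] * len(a)
    grid = blocks_needed(len(a), threads_per_block)
    # On a GPU these (block, thread) pairs run in parallel across SMs.
    for block_idx in range(grid):
        for thread_idx in range(threads_per_block):
            vector_add_thread(block_idx, threads_per_block, thread_idx, a, b, out)
    return out, grid


a = list(range(1000))
b = [2 * x for x in a]
result, grid = launch_vector_add(a, b)
print(grid)         # 4
print(result[:3])   # [0, 3, 6]
```

The bounds check matters because 1,000 elements do not divide evenly into blocks of 256. The fourth block therefore has 24 threads with no work to do.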

Comparing GPUs in AI Training and Inference

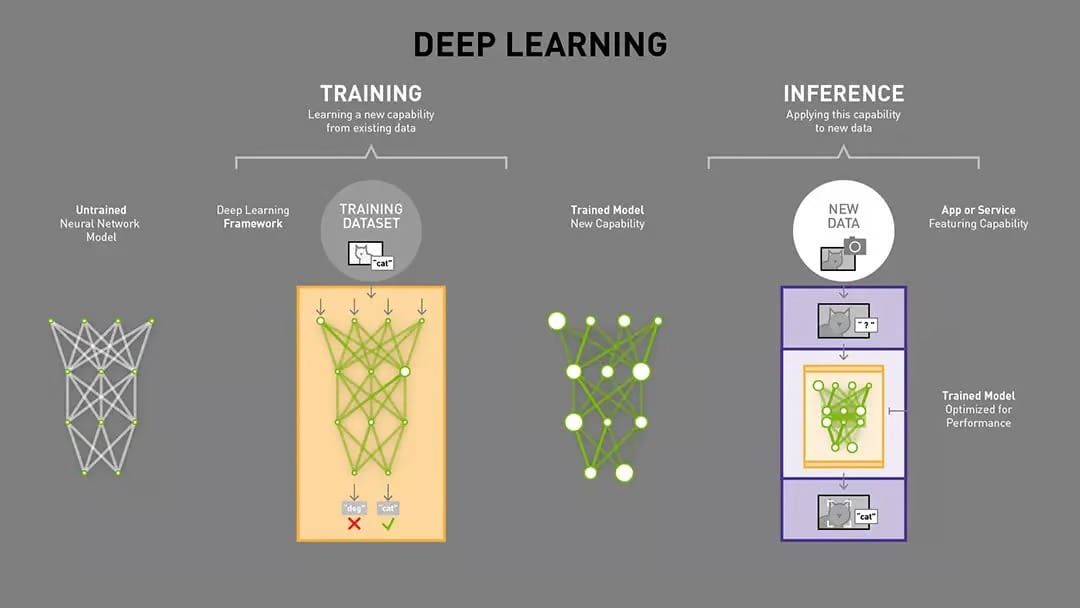

When it comes to AI, GPUs play a vital role in both training and inference. First, let's define the two:

- Training: feeding a neural network vast amounts of data so that it learns to make predictions. The data flows through layers that apply weights to their inputs. Those weights are adjusted over many iterations until the network produces consistently accurate results. Training is very compute-intensive. GPT-3, for example, required about 3.14 × 10^23 floating-point operations in total, roughly 300 zettaFLOPs.

- Inference: the application of a trained model to make predictions on new data. It only requires passing data forward through the network, with no weight updates. The model can also be simplified and optimized for speed while keeping nearly the accuracy it reached in training. This is what enables real-time AI applications.
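The difference between the two phases shows up even in a tiny model. The sketch below is a hypothetical example, not how production frameworks are written. It trains a one-variable linear model with plain gradient descent on mean squared error, then uses it for inference. Training repeats a forward pass, a gradient computation, and a weight update thousands of times. Inference is a single forward pass with the learned weights.

```python
def predict(w: float, b: float, x: float) -> float:
    """Forward pass of a one-weight linear model: the only step inference needs."""
    return w * x + b


def train(xs, ys, lr=0.05, epochs=2000):
    """Plain batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass over the whole training set.
        errors = [predict(w, b, x) - y for x, y in zip(xs, ys)]
        # Backward pass: gradients of the mean squared error w.r.t. w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Update step: nudge the weights against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Training: learn y = 2x + 1 from five noiseless examples.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = train(xs, ys)

# Inference: one forward pass on an input the model never saw.
print(round(w, 3), round(b, 3))        # 2.0 1.0
print(round(predict(w, b, 10.0), 3))  # 21.0
```

Real networks have millions or billions of weights spread across many layers. That scale is why the training loop is so compute-intensive.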

The parallel processing capability of GPUs can bring 10-100x speedups for AI workloads compared with CPU-only systems.
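A common rule of thumb connects the GPT-3 training figure with these speedups. It estimates training compute as about 6 FLOPs per parameter per training token: roughly 2 for the forward pass and 4 for the backward pass. The sketch below applies the rule to GPT-3's 175 billion parameters and roughly 300 billion training tokens, then converts the result into wall-clock time. The following inputs are illustrative assumptions, not the actual GPT-3 setup:

- the cluster size;
- the 312 TFLOPS per-GPU peak, which is the A100's advertised dense FP16/BF16 Tensor Core figure;
- the 40% utilization.

```python
SECONDS_PER_DAY = 86_400


def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb total training compute: ~6 FLOPs per parameter per token."""
    return 6.0 * num_params * num_tokens


def training_days(total_flops: float, peak_flops_per_gpu: float,
                  num_gpus: int, utilization: float) -> float:
    """Wall-clock days if the cluster sustains `utilization` of its peak."""
    sustained_flops_per_sec = peak_flops_per_gpu * num_gpus * utilization
    return total_flops / sustained_flops_per_sec / SECONDS_PER_DAY


gpt3_flops = training_flops(175e9, 300e9)
print(f"{gpt3_flops:.2e}")  # 3.15e+23

# Hypothetical cluster: 1,024 GPUs at 312 TFLOPS peak, 40% sustained.
days = training_days(gpt3_flops, 312e12, 1024, 0.40)
print(f"{days:.1f} days")  # 28.5 days
```

The estimate lands close to the roughly 3.14 × 10^23 FLOPs reported for GPT-3. Training time scales inversely with sustained throughput. A system that is 10-100x slower would therefore stretch the same run from about a month to roughly nine months or nearly eight years.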

AI Supercomputers

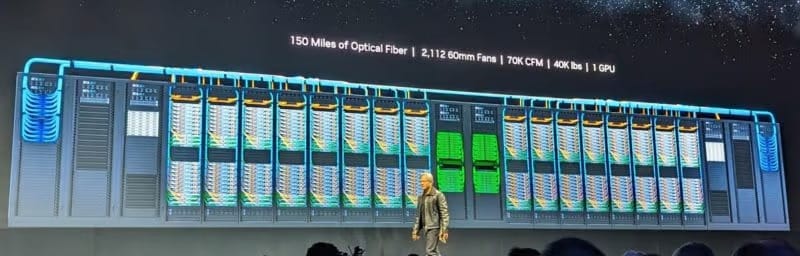

AI supercomputers have garnered significant attention for their ability to accelerate AI research. These systems often consist of clusters of GPUs working in tandem, creating enormous computing power. Notable GPU-based examples are Summit at Oak Ridge National Laboratory and Sierra at Lawrence Livermore National Laboratory. Both combine IBM POWER9 CPUs with Nvidia V100 GPUs. Fugaku in Japan is another prominent system, although it relies on Fujitsu's Arm-based A64FX processors rather than GPUs. These supercomputers are advancing scientific research, drug discovery, and climate modeling, illustrating the immense potential of large-scale accelerated computing in AI applications.

To enable rapid communication and data transfer between GPUs and compute nodes, AI supercomputers use high-performance interconnect technologies. These interconnects provide low-latency, high-bandwidth communication, which allows AI tasks to be parallelized efficiently. A good example is Nvidia's NVLink, which connects GPUs to one another with far more bandwidth than standard PCIe. Between nodes, clusters typically use high-speed networks such as InfiniBand.
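Data-parallel training shows why interconnect bandwidth matters so much. In this setup, every GPU computes gradients on its own slice of data. All GPUs must then sum (all-reduce) those gradients at every step. Ring all-reduce is one common algorithm for this; Nvidia's NCCL library, for example, uses it. The pure-Python sketch below simulates ring all-reduce on lists instead of GPUs and counts the elements sent. The bandwidth helper at the end uses hypothetical link speeds and ignores latency.

```python
def ring_allreduce(buffers):
    """Simulate ring all-reduce; returns (per-node results, elements sent).

    Phase 1 (reduce-scatter): after p-1 steps, node i holds the full sum of
    chunk (i + 1) % p. Phase 2 (all-gather): p-1 more steps circulate the
    finished chunks until every node has the whole summed vector.
    """
    p, n = len(buffers), len(buffers[0])
    bounds = [(k * n // p, (k + 1) * n // p) for k in range(p)]
    data = [list(buf) for buf in buffers]
    sent = 0

    for phase in ("reduce_scatter", "all_gather"):
        for step in range(p - 1):
            # Every node sends one chunk to its right-hand neighbour; copy
            # payloads first so all sends in a step happen "simultaneously".
            messages = []
            for i in range(p):
                if phase == "reduce_scatter":
                    chunk = (i - step) % p
                else:
                    chunk = (i + 1 - step) % p
                lo, hi = bounds[chunk]
                messages.append(((i + 1) % p, chunk, data[i][lo:hi]))
            for dst, chunk, payload in messages:
                lo, hi = bounds[chunk]
                if phase == "reduce_scatter":
                    for k, value in enumerate(payload):
                        data[dst][lo + k] += value   # accumulate partial sums
                else:
                    data[dst][lo:hi] = payload       # overwrite with final sum
                sent += len(payload)
    return data, sent


def allreduce_seconds(num_bytes, num_gpus, link_gb_per_sec):
    """Bandwidth term only: each GPU sends 2(p-1)/p of the buffer."""
    return 2 * (num_gpus - 1) / num_gpus * num_bytes / (link_gb_per_sec * 1e9)


grads = [[10 * rank + k for k in range(8)] for rank in range(4)]
results, sent = ring_allreduce(grads)
print(results[0])  # [60, 64, 68, 72, 76, 80, 84, 88]
print(sent)        # 48
```

Consider a hypothetical 14 GB of FP16 gradients, corresponding to a 7-billion-parameter model, spread across 8 GPUs. Here `allreduce_seconds(14e9, 8, 300)` gives about 0.08 s per step over a 300 GB/s link, versus about 0.8 s at 30 GB/s. Both speeds are illustrative assumptions, not specifications of NVLink or PCIe.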

Many of the world's top supercomputing centers are integrating GPUs to build extremely powerful next-generation systems focused on advancing AI.

Selecting the Appropriate GPU for Your Business Needs

Selecting the right GPU for your AI needs is a crucial decision. Consider the following factors:

- Workload Focus: Identify whether your primary GPU usage is for AI training, inference, or both, aligning your choice with your workload demands.

- Budget Balance: Consider your budget constraints while striking a balance between GPU performance and cost-effectiveness.

- Compatibility Check: Ensure GPU compatibility with existing hardware and software infrastructure, whether you're opting for in-house or cloud solutions.

- Scalability Planning: Plan for future scalability by choosing GPUs that can easily accommodate growing computational requirements, whether on-premises or in the cloud.

- Technical Specs Analysis: Examine GPU specifications such as core count, memory, bandwidth, and clock speeds to match your specific needs.

- Specialized Features: Look for GPUs with features that align with your workload, like tensor cores for AI or ray tracing for graphics.

- Vendor Ecosystem: Consider GPU vendor support, including software libraries, tools, and support services. Cloud providers often offer GPUs from several vendors, which makes it easier to stay vendor-neutral.

- Cloud-Based Versus In-House: Decide between cloud-based GPU services for flexibility and scalability or in-house solutions for complete control and potential cost savings.

- Performance Evaluation: Evaluate GPUs based on real-world performance benchmarks, considering your use case, whether in the cloud or on-premises.

- Energy Efficiency: Factor in the energy efficiency of GPUs to reduce operational costs, whether you're using them in-house or in the cloud.

- Availability and Procurement: Be aware of GPU availability and lead times, and adapt your procurement strategy accordingly, whether you opt for cloud or in-house solutions.
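The memory and bandwidth specs in the list above can be turned into quick sizing checks. The sketch below uses three common rules of thumb:

- 2 bytes per parameter to hold FP16/BF16 weights for inference.
- About 16 bytes per parameter for mixed-precision training with the Adam optimizer. This covers FP16 weights and gradients plus FP32 master weights and two optimizer moments, but excludes activations.
- For single-request text generation, an upper bound of one full read of the weights per generated token.

The 7-billion-parameter model, the 80 GB card, and the 2,000 GB/s memory bandwidth are hypothetical inputs.

```python
GB = 1e9  # decimal gigabytes, for simplicity


def inference_weights_gb(num_params: float, bytes_per_param: float = 2) -> float:
    """Memory for the weights alone (2 bytes for FP16/BF16, 1 for INT8)."""
    return num_params * bytes_per_param / GB


def training_state_gb(num_params: float, bytes_per_param: float = 16) -> float:
    """Mixed-precision Adam: 2 (FP16 weights) + 2 (FP16 grads)
    + 12 (FP32 master weights, momentum, variance) bytes per parameter.
    Activations and framework overhead are NOT included."""
    return num_params * bytes_per_param / GB


def max_tokens_per_sec(weights_gb: float, bandwidth_gb_per_sec: float) -> float:
    """Upper bound for batch-size-1 decoding: each token reads all weights once."""
    return bandwidth_gb_per_sec / weights_gb


params = 7e9          # hypothetical model size
gpu_memory_gb = 80    # hypothetical card

weights = inference_weights_gb(params)
state = training_state_gb(params)
print(weights, weights <= gpu_memory_gb)  # 14.0 True
print(state, state <= gpu_memory_gb)      # 112.0 False
print(round(max_tokens_per_sec(weights, 2000), 1))  # 142.9
```

In this example the model fits on one card for inference, but its training state does not. That gap is where multi-GPU setups and scalability planning come in.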

By weighing these considerations, including the choice between cloud-based and in-house solutions, you can make a better-informed decision about your business's GPU needs.

Balancing Cost Optimization and Performance

Balancing cost optimization and performance is a key challenge in GPU selection. It's important to strike a balance between the computational power you need and the budget you have available. Consider exploring cloud-based GPU services from providers such as AWS, Azure, IBM Cloud, or Google Cloud for flexibility and cost-effectiveness. These services allow you to scale your GPU resources up or down as needed, reducing upfront hardware costs.

- Set clear cost-efficiency targets based on your business needs.

- Consider the total cost of ownership (TCO), including operational expenses.

- Evaluate scalability and future-proofing to avoid frequent hardware upgrades.

- Decide between cloud and in-house GPU solutions for flexibility and control.

- Match GPU choice to your workload, focusing on specific optimizations.

- Assess energy efficiency for long-term operational savings.

- Review performance benchmarks relevant to your use case.

- Explore vendor options for the right price-performance ratio.

- Monitor GPU resource utilization to optimize allocation.

- Conduct financial analysis to weigh upfront costs against potential ROI.
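Several of these points meet in a simple break-even calculation: total cost of ownership, cloud versus in-house, utilization, and ROI. The calculation asks how many GPU-hours of use it takes before buying a server costs less than renting equivalent cloud capacity. The sketch below is a deliberately simplified model. Every price, power figure, and PUE (power usage effectiveness) value in it is a hypothetical placeholder, not a quote from any vendor or cloud provider. The model ignores financing and depreciation schedules, and it folds staffing and maintenance into a single hourly figure.

```python
HOURS_PER_YEAR = 24 * 365


def on_prem_hourly_opex(power_kw: float, price_per_kwh: float,
                        pue: float, other_per_hour: float) -> float:
    """Hourly running cost of an owned server: facility-level electricity
    (IT power scaled by PUE) plus maintenance, staff, etc."""
    return power_kw * price_per_kwh * pue + other_per_hour


def break_even_hours(capex: float, cloud_rate_per_hour: float,
                     opex_per_hour: float) -> float:
    """Hours of use after which buying becomes cheaper than renting."""
    saving_per_hour = cloud_rate_per_hour - opex_per_hour
    if saving_per_hour <= 0:
        return float("inf")  # owning never catches up
    return capex / saving_per_hour


def years_to_break_even(hours: float, utilization: float) -> float:
    """Calendar years needed to accumulate `hours` at a given utilization."""
    return hours / (HOURS_PER_YEAR * utilization)


# All inputs below are hypothetical placeholders.
opex = on_prem_hourly_opex(power_kw=0.7, price_per_kwh=0.15, pue=1.5, other_per_hour=0.5)
hours = break_even_hours(capex=30_000, cloud_rate_per_hour=3.0, opex_per_hour=opex)
for util in (0.3, 0.6, 0.9):
    print(f"utilization {util:.0%}: {years_to_break_even(hours, util):.1f} years")
```

The lower the expected utilization, the longer an in-house purchase takes to pay off. That is why monitoring utilization matters as much as the sticker price.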

The Future of AI Computers and Chips

The future of AI hardware is characterized by key trends:

- Specialized AI Chips: Dedicated AI processors like TPUs will deliver unmatched performance and efficiency.

- Quantum Computing: Quantum AI algorithms will revolutionize complex problem-solving.

- Neuromorphic Computing: AI chips mimicking the brain's architecture will lead to human-like AI interactions.

- Edge AI and IoT: Integration will reduce latency and enhance privacy in AI inference.

- Exascale Supercomputers: These supercomputers will power complex AI research and simulations.

- AI-Driven Chip Design: Machine learning is already optimizing chip layouts and materials for efficiency.

- AI Hardware Standards: Standardization will ensure interoperability and foster innovation in AI hardware.

Wrapping up

GPUs have become the backbone of AI, enabling faster and more efficient processing of data for training and inference. Selecting the right GPU for your business needs, balancing cost optimization and performance, and addressing challenges are essential steps in harnessing the power of GPUs for AI applications. As technology continues to advance, GPUs will remain at the forefront of AI innovation, driving progress in numerous industries.

I hope this GPU 101 post helped you understand the landscape.